To collect meaningful, actionable survey insights, you need a solid foundation: good survey questions. This may seem obvious, but it is essential to the overall success of your survey.

Well-written survey questions solicit accurate, actionable insights that can guide product development, shape marketing campaigns and improve customer journeys.

Learn how to write good survey questions for your next research project.

What makes good survey questions?

Good survey questions are clear, neutral, unbiased and relevant to your research objective.

Questions are the core of any survey: they determine what insights and data you gather from respondents. Crafting them carefully is essential for obtaining accurate, actionable information.

Good questions depend on your objective

Good survey questions are relevant to your goals and objectives. For example, some help you to understand customers’ satisfaction with your company, products or services. The Net Promoter Score® (NPS®) question, “On a scale of 0 to 10, how likely is it that you would recommend this company to a friend?”, is an excellent survey question if you’re measuring customer satisfaction and loyalty.

However, an employee NPS (eNPS) survey question would be a better fit if your objective is to measure employee engagement.

Good surveys combine qualitative and quantitative questions

Combining qualitative, open-ended questions with quantitative, closed-ended questions enhances survey insights: open-ended questions capture detailed feedback in respondents’ own words, while closed-ended questions produce measurable data. By understanding the various types of survey questions and how to use them effectively, you can create surveys that yield valuable insights and drive informed decision-making.

60 good survey question examples

Your research objective determines whether a survey question is ‘good’ or ‘bad’. Here are 60 good survey question examples, grouped by situation: customer service, employee engagement, user experience, post-event feedback and quantitative research.

Good customer service survey questions

Clear, straightforward customer service survey questions accomplish two things: they solicit honest customer feedback and reinforce your company brand. Here are 12 customer service questions to jump-start your customer support survey.

- How satisfied are you with the overall customer service experience?

- How did you contact our customer support team?

- How easy was it to get in touch with our customer service?

- How satisfied are you with the response time of our customer support team?

- How would you rate the professionalism of our customer support team?

- How knowledgeable did you find our customer service representatives?

- How well did our customer support team resolve your issue?

- What could our customer support team have done better?

- How likely is it that you would recommend our customer support to others?

- How likely is it that you would recommend our company to a friend or colleague?

- Do you have any other comments or suggestions for our customer support team?

- Please provide any additional comments or suggestions that you may have.

Good employee survey questions

Employee survey questions deserve the same care and attention as customer-facing questions. Employees are just as likely as customers to abandon a survey or ‘straightline’ it (select the same answer for every item).

Abandoned and straightlined responses can seriously distort your employee engagement and satisfaction data and misdirect employee initiatives. To reduce this risk, use clear, relevant Likert scale and rating scale questions like these to gauge employee engagement and satisfaction.

- I am satisfied that I have opportunities to apply my expertise.

- My organisation is dedicated to my professional development.

- I am satisfied with my overall job security.

- How easy is it to get help from your supervisor when you need it?

- How understanding are your colleagues?

- How reliable is your supervisor?

- When I speak up at work, my opinion is valued.

- How well does your supervisor facilitate your professional growth?

- How realistic were the expectations that were set for you?

- Overall, how fairly were you treated?

- How involved are employees in setting the company’s objectives?

- How well do the members of your department work together to achieve a common goal?
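Straightlining is easy to screen for once answers to a block of Likert statements are coded as numbers. The sketch below is a minimal illustration, assuming each respondent’s answers are stored as a list of codes from 1 (strongly disagree) to 5 (strongly agree); the `is_straightlined` helper, the `min_items` threshold and the sample responses are all hypothetical.

```python
from typing import Sequence


def is_straightlined(answers: Sequence[int], min_items: int = 3) -> bool:
    """Return True if a respondent gave the identical answer to every item.

    Blocks shorter than `min_items` are never flagged, because identical
    answers to one or two items are weak evidence of straightlining.
    """
    if len(answers) < min_items:
        return False
    return len(set(answers)) == 1


# Hypothetical answers to five Likert statements (1 = strongly disagree, 5 = strongly agree)
responses = {
    "r1": [4, 4, 4, 4, 4],
    "r2": [5, 3, 4, 2, 4],
    "r3": [1, 1],
}
flagged = [rid for rid, answers in responses.items() if is_straightlined(answers)]
print(flagged)  # ['r1']
```

A flag like this is a prompt to review a response rather than proof of poor data: a respondent may genuinely agree with every statement.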

Good user experience (UX) survey questions

Good UX surveys use various question types to understand customers’ experience with your product. Likert scale questions gather quantitative data, while open-ended questions help you to understand customer sentiment.

Use these UX survey questions to evaluate user experience and product usability. You may also want to include high-resolution images with some questions.

- How would you rate the overall user experience of our product?

- How would you describe our product in a single word or sentence?

- How easy was it to navigate through our product?

- How visually appealing do you find our product?

- Which features do you find most useful? (Select all that apply.)

- What do you like most about our product?

- How responsive is our product?

- What improvements would you suggest for our product?

- How likely is it that you would recommend our product to a friend or colleague?

- How satisfied are you with the user interface of our product?

- How satisfied are you with the ability to collaborate with other users in our product?

- How often do you use our product?

Good survey questions after an event

Post-event feedback surveys are typically sent immediately after an event, when respondents are likely to complete them on mobile devices. For this reason, the questions are usually concise and closed-ended, which helps to keep response rates high.

Consider these survey questions after an event to collect feedback from participants.

- Overall, how would you rate the event?

- Overall, were you satisfied or dissatisfied with the event?

- How could future events be improved? (Select all that apply.)

- Was the event length too long, too short or about right?

- How much did the event live up to your expectations?

- How likely is it that you would recommend this event to a friend or colleague?

- Prior to the event, how much of the information that you needed did you receive?

- How useful was the information presented at the event?

- How helpful were the staff at the event?

- How likely is it that you would attend this event again in the future?

- How would you rate the vendors at the event?

- How clearly was the information presented at the event?

Good quantitative survey questions

Closed-ended questions are among the most effective ways to gather quantitative information. They limit respondents to predefined answer options, which makes the results easier to analyse. You aren’t restricted to closed-ended questions, though. In many cases, such as the NPS question, it is best practice to follow up with an open-ended question to understand the reasoning behind the respondent’s answer.

Here are 12 examples of quantitative survey questions for a variety of research objectives.

- How likely is it that you would recommend this company to a friend as a place to work?

- How likely is it that you would recommend this company to a friend or colleague?

- How easy was it for you to complete this action?

- Overall, how satisfied are you with the speed of our customer service?

- How would you rate this employee’s performance?

- When was the last time you used this product category?

- Will you be attending the event?

- Overall, how well does our website meet your needs?

- Overall, how would you rate your purchase experience today?

- How would you rate each aspect of your experience?

- How likely is it that you would purchase any of our products again?

- In a typical week, how often do you feel stressed at work?


Types of survey questions

Each type of survey question, such as multiple choice, rating scale or open-ended, serves a distinct purpose. Used together, they can provide a comprehensive understanding of respondents’ opinions and experiences.

As you review your survey questions, consider how well each of the following question types would gather the information that you need. Nominal questions, for instance, present respondents with answer choices that do not overlap, ensuring clear and precise responses.

1. Dichotomous questions

Dichotomous questions restrict respondents to two straightforward answer options, most often “yes” or “no”. This type of survey question is quantitative and can be followed up with an open-ended question.

For example, a human resources professional may ask a new hire whether they received a benefits package presentation.

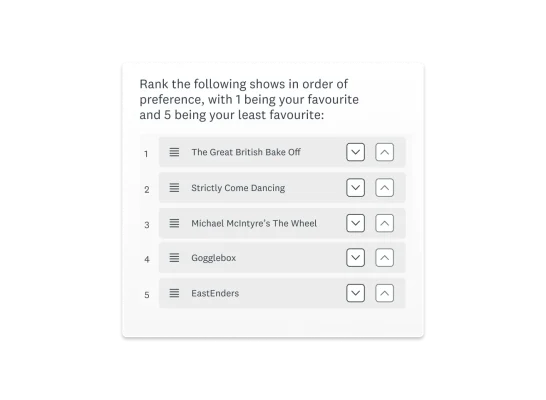

2. Ranking questions

Ranking questions ask respondents to put answer choices in order of preference. They can be a fun, interactive activity for respondents, and they can also gather insights into niche needs.

A product manager may use a ranking question to ask customers to rank product features. Survey responses can help product managers to prioritise feature updates.
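One simple way to turn ranking responses into priorities is to average each option’s rank position across respondents, where a lower average means a higher priority. This is a minimal sketch of that approach, not the scoring method of any particular survey tool; the `average_ranks` helper, feature names and responses are hypothetical.

```python
from collections import defaultdict
from statistics import fmean


def average_ranks(rankings: list[list[str]]) -> dict[str, float]:
    """Return each option's mean rank position across respondents (1 = top choice)."""
    positions: dict[str, list[int]] = defaultdict(list)
    for ranking in rankings:
        for position, option in enumerate(ranking, start=1):
            positions[option].append(position)
    return {option: fmean(pos) for option, pos in positions.items()}


# Hypothetical responses: each list orders features from most to least preferred
rankings = [
    ["search", "export", "dark mode"],
    ["export", "search", "dark mode"],
    ["search", "dark mode", "export"],
]
scores = average_ranks(rankings)
priority = sorted(scores, key=lambda option: scores[option])
print(priority)  # ['search', 'export', 'dark mode']
```

Average rank is easy to explain to stakeholders, but it treats the gap between first and second place as equal to the gap between second and third, which may not match how respondents actually feel.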

3. Semantic differential scale questions

A semantic differential scale question asks respondents to rate their attitude towards a topic. The two end points of the scale are opposites.

For example, if a product usability question asks “How easy was it to use this product feature?”, then the two answer end points would be “very easy” and “very difficult”.

4. Matrix questions

Matrix questions group several questions into a grid in which every row shares the same set of response options. Likert scale questions or rating scale questions work well as matrix questions.

For example, a customer experience professional may ask whether, on a scale of “very satisfied” to “very dissatisfied”, customers are satisfied with the product, customer support team and onboarding.

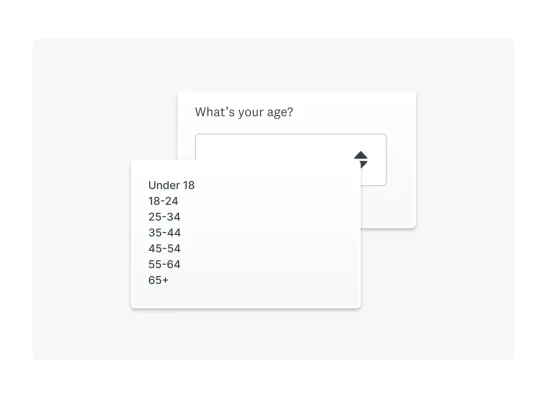

5. Dropdown questions

Dropdown questions are closed-ended, multiple choice questions that allow for a long list of responses. Bear in mind that a long dropdown list may not display well on mobile devices.

6. Slider questions

Slider questions are interactive, quantitative survey questions. Respondents drag a slider to select a value on a numerical scale, which makes the data easy for survey-makers to aggregate and analyse.

7. Image choice questions

Image choice questions allow respondents to select images as answers. This type of question works well when you want respondents to evaluate visual qualities. For example, you can use this question for logo testing and user interface testing.

8. Constant sum questions

Constant sum questions require respondents to distribute a fixed total of points or percentages across several options.

For example, a survey question may ask the following: “Using 100 points, please allocate points to each factor based on how important it is to you when using our product.”
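Because every answer must add up to the same total, a constant sum response can be validated and then read as each factor’s share of importance. A minimal sketch, assuming an answer arrives as a mapping from factor to points; the `validate_allocation` helper and the factor names are hypothetical.

```python
def validate_allocation(points: dict[str, int], total: int = 100) -> dict[str, float]:
    """Check a constant sum answer and return each factor's share of the total.

    Raises ValueError if any allocation is negative or if the points
    do not add up to exactly `total`.
    """
    if any(value < 0 for value in points.values()):
        raise ValueError("Point allocations cannot be negative")
    allocated = sum(points.values())
    if allocated != total:
        raise ValueError(f"Allocated {allocated} points; expected exactly {total}")
    return {factor: value / total for factor, value in points.items()}


answer = {"price": 50, "ease of use": 30, "support": 20}
print(validate_allocation(answer))  # {'price': 0.5, 'ease of use': 0.3, 'support': 0.2}
```

A check like this is mainly useful for data imported from elsewhere, where the total may not have been enforced when the answer was collected.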

9. Side-by-side questions

Side-by-side questions enable you to ask multiple questions in a condensed format. Like matrix questions, this type of question will enable you to evaluate several aspects of a subject in a straightforward format.

10. Star rating questions

Star rating questions are another way for respondents to evaluate a statement on a visual scale. The scale can use stars, hearts, thumbs, smiley faces or other icons that help survey-makers to measure respondent sentiment. Whichever visual you use, each point on the scale is assigned a numeric weight so that responses can be aggregated into a quantitative score.

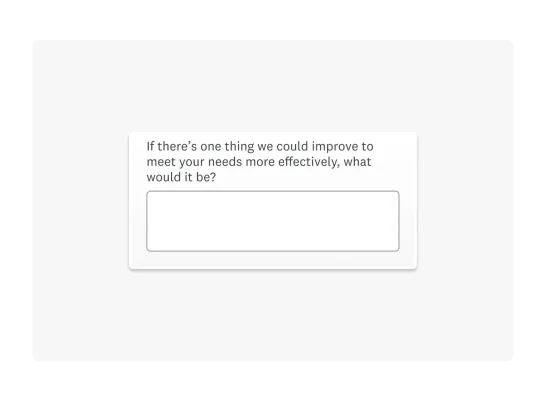
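Assigning each icon a weight is what makes aggregation possible. The sketch below assumes a hypothetical five-point smiley-face scale weighted 1 to 5 (a five-star scale maps the same way) and averages the weights of the selected icons; the labels, the `average_score` helper and the sample selections are illustrative only.

```python
from statistics import fmean

# Hypothetical weights for a five-point smiley-face scale.
# A five-star scale works the same way: one star = 1, ..., five stars = 5.
SMILEY_WEIGHTS = {
    "very unhappy": 1,
    "unhappy": 2,
    "neutral": 3,
    "happy": 4,
    "very happy": 5,
}


def average_score(selections: list[str], weights: dict[str, int]) -> float:
    """Convert each selected icon to its numeric weight and return the mean."""
    if not selections:
        raise ValueError("No responses to score")
    return fmean([weights[choice] for choice in selections])


selections = ["happy", "very happy", "neutral", "happy", "very happy"]
print(round(average_score(selections, SMILEY_WEIGHTS), 2))  # 4.2
```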

11. Open-ended questions

Do you want to hear from survey respondents in their own words? If so, you’ll need an open-ended question requiring respondents to type their answers into a text box instead of choosing from preset answer options.

Since open-ended questions are exploratory, they invite insights into respondents’ opinions, feelings and experiences. Good open-ended questions will often explore all three and serve as follow-ups to previous closed-ended questions.

12. Multiple choice questions

Multiple choice questions are the most popular survey question type. They allow your respondents to select one or more options from a list of answers that you define. They’re intuitive, produce easy-to-analyse data and, when well written, offer distinct, non-overlapping choices. Because the answer options are fixed, the survey is also quicker and easier for respondents to complete.

13. Likert scale questions

Likert scales are a specific type of rating scale used to measure attitudes and opinions. They’re the “agree or disagree” and “likely or unlikely” questions that you often see in online surveys. Rather than offering a simple “yes” or “no”, they use a five- or seven-point scale that runs from one extreme attitude to the other.

For example:

- Strongly agree

- Agree

- Neither agree nor disagree

- Disagree

- Strongly disagree
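Likert answers are usually coded as numbers before analysis. A minimal sketch, assuming the five labels above are coded 1 (strongly disagree) to 5 (strongly agree); it counts each label and reports the share of respondents who agree or strongly agree, often called a ‘top-two box’ score. The `summarise` helper and the sample responses are hypothetical.

```python
from collections import Counter

# Assumed coding: 1 = strongly disagree ... 5 = strongly agree
LIKERT_CODES = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neither agree nor disagree": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def summarise(responses: list[str]) -> tuple[Counter, float]:
    """Return counts per label and the share who chose Agree or Strongly agree."""
    if not responses:
        raise ValueError("No responses to summarise")
    unknown = [r for r in responses if r not in LIKERT_CODES]
    if unknown:
        raise ValueError(f"Unrecognised labels: {unknown}")
    counts = Counter(responses)
    agreeing = sum(1 for r in responses if LIKERT_CODES[r] >= 4)
    return counts, agreeing / len(responses)


responses = ["Agree", "Strongly agree", "Neither agree nor disagree", "Agree", "Disagree"]
counts, top_two = summarise(responses)
print(counts["Agree"], f"{top_two:.0%}")  # 2 60%
```

Reporting the distribution and top-two box, rather than only a mean of the codes, avoids assuming that the gaps between labels are equal.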

Tips for writing good survey questions

You already know that good survey questions are clear, neutral, unbiased and relevant. But the question remains: how do you write good survey questions? Here are five tips that you can use right now to write good survey questions for accurate data collection.

Avoid leading questions

Leading questions nudge respondents towards a specific answer.

You know the type: “Since you love our product so much, how likely is it that you would recommend it to a friend?”; or “Tell us about how the conference changed your professional life.”

These survey questions assume the respondent’s experience, inserting bias into the question.

Use simple language

Use language that your respondents understand, and save the jargon for your colleagues.

Think of it this way: how can respondents answer to the best of their ability if they don’t understand the question? They could look up unfamiliar terms, but they are more likely to pick a random answer or abandon the survey, either of which undermines the accuracy of your data.

Simplify your survey question language. And if you must use industry-specific language, define it for the respondents.

Pretest and refine questions

- Pretesting: Test your survey questions on a small group of respondents to ensure that they are clear and effective. Pretesting will help you to identify any issues or biases in your questions before the survey is distributed to a larger audience. This step is crucial for refining your questions and improving the overall quality of your survey.

- Refining: Based on the feedback that you receive from pretesting, refine your survey questions to ensure that they are clear, specific and neutral. Make any necessary changes to improve the clarity and effectiveness of your questions. This iterative process helps to create a more reliable and accurate survey.

Ask one thing at a time

Double-barrelled questions, or questions that ask for a respondent’s opinion about two things at the same time, are a recipe for confusion and flawed data.

An example of this is the question “How would you rate our customer service and product reliability?”. This combines a question about customer service with a question about product reliability, so it is not clear which one the respondent should answer.

This may result in the respondent skipping the question, providing an answer that doesn’t reflect their true opinion or abandoning the survey altogether.

To avoid double-barrelled questions, check each question against your research objective and make sure that it addresses a single point. You should also share your survey with colleagues before you send it to your target audience, because they can help to identify issues that may not be evident to you.

Ensure clarity, specificity and neutrality

- Neutrality: Ensure that your survey questions are neutral and do not influence respondents’ answers. Avoid leading questions or assumptions that may bias responses. For example, instead of asking “How much do you love our product?”, you could ask “How satisfied are you with our product?”. Neutral questions will help you to gather unbiased and honest feedback.

- Clarity: Ensure that your survey questions are clear and easy to understand. You should avoid using jargon or technical terms that may confuse respondents. For example, instead of asking “How would you rate the UX of our product?”, you could ask “How easy is it to use our product?”. Clear questions help respondents to provide accurate answers, resulting in more reliable data.

- Specificity: Make sure that each survey question focuses on one particular aspect of what you are measuring. Vague questions can lead to ambiguous answers that are difficult to analyse. For instance, instead of asking “How do you feel about our service?”, you could ask “How satisfied are you with the response time of our customer service team?”. Specific questions like this one provide more precise insights into customer satisfaction.

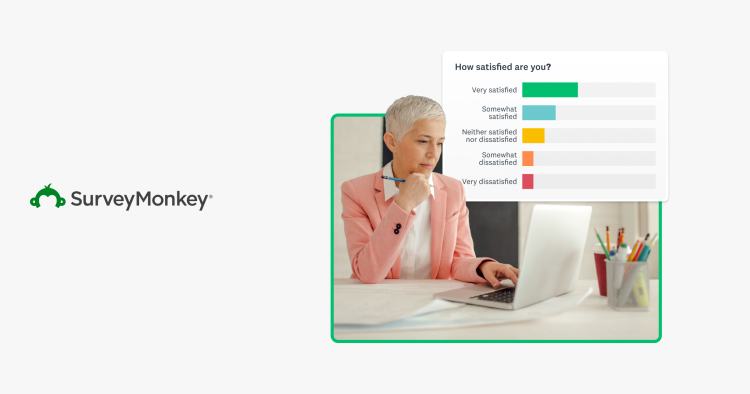

Create effective surveys with SurveyMonkey

Good survey questions are key to effective market research. The right questions will solicit actionable data that puts product development, marketing campaigns and customer experience (CX) initiatives firmly on the path to success.

The good news is that you don’t have to be a survey expert to write survey questions. SurveyMonkey has 400+ expert-written survey templates and prebuilt forms that you can customise to suit your specific requirements.

NPS, Net Promoter and Net Promoter Score are registered trademarks of Satmetrix Systems, Inc., Bain & Company and Fred Reichheld.